Patient reported measures of continuity of care and health outcomes: a systematic review

BMC Primary Care volume 25, Article number: 309 (2024)

Abstract

Background

There is a considerable amount of research showing an association between continuity of care and improved health outcomes. However, the methods used in most studies examine only the pattern of interactions between patients and clinicians through administrative measures of continuity. The patient experience of continuity can also be measured by using patient reported experience measures. Unlike administrative measures, these can allow elements of continuity such as the presence of information or how joined up care is between providers to be measured. Patient experienced continuity is a marker of healthcare quality in its own right. However, it is unclear if, like administrative measures, patient reported continuity is also linked to positive health outcomes.

Methods

Cohort and interventional studies that examined the relationship between patient reported continuity of care and a health outcome were eligible for inclusion. Medline, EMBASE, CINAHL and the Cochrane Library were searched in April 2021. Citation searching of published continuity measures was also performed. QUIP and Cochrane risk of bias tools were used to assess study quality. A box-score method was used for study synthesis.

Results

Nineteen studies were eligible for inclusion. Fifteen studies measured continuity using a validated, multifactorial questionnaire or the continuity/co-ordination subscale of another instrument. Two studies placed patients into discrete groups of continuity based on pre-defined questions, one used a bespoke questionnaire, and one calculated an administrative measure of continuity using patient reported data. Outcome measures examined were quality of life (n = 11), self-reported health status (n = 8), emergency department use or hospitalisation (n = 7), indicators of function or wellbeing (n = 6), mortality (n = 4) and physiological measures (n = 2). Analysis was limited by the relatively small number of heterogeneous studies. The majority of studies showed a link between at least one measure of continuity and one health outcome.

Conclusion

Whilst there is emerging evidence of a link between patient reported continuity and several outcomes, the evidence is not as strong as that for administrative measures of continuity. This may be because administrative measures record something different to patient reported measures, or that studies using patient reported measures are smaller and less able to detect smaller effects. Future research should use larger sample sizes to clarify if a link does exist and what the potential mechanisms underlying such a link could be. When measuring continuity, researchers and health system administrators should carefully consider what type of continuity measure is most appropriate.

Introduction

Continuity of primary care is associated with multiple positive outcomes including reduced hospital admissions, lower costs and a reduction in mortality [1,2,3]. Providing continuity is often seen as opposed to providing rapid access to appointments [4] and many health systems have chosen to focus primary care policy on access rather than continuity [5,6,7]. Continuity has fallen in several primary care systems and this has led to calls to improve it [8, 9]. However, it is sometimes unclear exactly what continuity is and what should be improved.

In its most basic form, continuity of care can be defined as a continuous relationship between a patient and a healthcare professional [10]. However, from the patient perspective, continuity of care can also be experienced as joined up seamless care from multiple providers [11].

One of the most commonly cited models of continuity by Haggerty et al. defines continuity as

“…the degree to which a series of discrete healthcare events is experienced as coherent and connected and consistent with the patient’s medical needs and personal context. Continuity of care is distinguished from other attributes of care by two core elements—care over time and the focus on individual patients” [11].

It then breaks continuity down into three parts (see Table 1) [11]. Other academic models of patient continuity exist but they contain elements which are broadly analogous [10, 12,13,14].

Continuity can be measured through administrative measures or by asking patients about their experience of continuity [16]. Administrative measures are commonly used as they allow continuity to be calculated easily for large numbers of patient consultations. Administrative measures capture one element of continuity – the frequency or pattern of professionals seen by a patient [16, 17]. There are multiple studies and several systematic reviews showing that better health outcomes are associated with administrative measures of continuity of care [1, 2, 18, 19]. One of the most recent of these reviews used a box-score method to assess the relationship between reduced mortality and continuity (i.e., counting the numbers of studies reporting significant and non-significant relationships) [18]. The review examined thirteen studies and found a positive association in nine. Administrative measures of continuity cannot capture aspects of continuity such as informational or management continuity or the nature of the relationship between the patient and clinicians. To address this, several patient-reported experience measures (PREMs) of continuity have been developed that attempt to capture the patient experience of continuity beyond the pattern in which they see particular clinicians [14, 17, 20, 21]. Studies have shown a variable correlation between administrative and patient reported measures of continuity and their relationship to health outcomes [22]. Pearson correlation coefficients vary between 0.11 and 0.87 depending on what is measured and how [23, 24]. This suggests that they are capturing different things and that both measures have their uses and drawbacks [23, 25]. Patients may have good administrative measures of continuity but report a poor experience. Conversely, administrative measures of continuity may be poor, but a patient may report a high level of experienced continuity.

Patient experienced continuity and patient satisfaction with healthcare are aims in their own right in many healthcare systems [26]. Whilst this is laudable, it may be unclear to policy makers if prioritising patient-experienced continuity will improve health outcomes.

This review seeks to answer two questions.

1) Is patient reported continuity of care associated with positive health outcomes?

2) Are particular types of patient reported continuity (relational, informational or management) associated with positive health outcomes?

Methods

A review protocol was registered with PROSPERO in June 2021 (ID: CRD42021246606).

Search strategy

A structured search was undertaken using appropriate search terms on Medline, EMBASE, CINAHL and the Cochrane Library in April 2021 (see Appendix). The searches were limited to the last 20 years. This age limitation reflects the period in which the more holistic description of continuity (as exemplified by Haggerty et al. 2003) became more prominent. In addition to database searches, existing reviews of PREMs of continuity and co-ordination were searched for appropriate measures. Citation searching of these measures was then undertaken to locate studies that used these outcome measures.

Inclusion criteria

Full text papers were reviewed if the title or abstract suggested that the paper measured (a) continuity through a PREM and (b) a health outcome. Health outcomes were defined as outcomes that measured a direct effect on patient health (e.g., health status) or patient use of emergency or inpatient care. Papers with outcomes relating to patient satisfaction or satisfaction with a particular service were excluded as were process measures (such as quality of documentation, cost to health care provider). Cohort and interventional studies were eligible for inclusion, if they reported data on the relationship between continuity and a relevant health outcome. Cross-sectional studies were excluded because of the risk of recall bias [27].

The majority of participants in a study had to be aged over 16, based in a healthcare setting and receiving healthcare from healthcare professionals (medical or non-medical). We felt that patients under 16 were unlikely to be asked to fill out continuity PREMs. Studies that used PREMs to quantitatively measure one or more elements of experienced continuity of care or coordination were eligible for inclusion [11]. Any PREMs that could map to one or more of the three key elements of Haggerty’s definition (Table 1) were eligible for inclusion. The types of continuity measured by each study were mapped to the Haggerty concepts of continuity by at least two reviewers independently. Our search also included patient reported measures of co-ordination, as a previous review of continuity PREMs highlighted the conceptual overlap between patient experienced continuity and some measures of patient experienced co-ordination [17]. Whilst there are different definitions of co-ordination, the concept of patient perceived co-ordination is arguably the same as management continuity [13, 14, 28]. Patient reported measures of care co-ordination were reviewed by two reviewers to see whether they measured the concept of management continuity. Because of the overlap between concepts of continuity and other theories (e.g., patient-centred care, quality of care), in studies where it was not clear that continuity was being measured, a decision on inclusion or exclusion, with documented reasons, was agreed after discussion between three of the reviewers (PBu, SS and AW). Disagreements were resolved by documented group discussion. Some PREMs measured concepts of continuity alongside other concepts such as access. These studies were eligible for inclusion only if measurements of continuity were reported and analysed separately.

Data abstraction

All titles/abstracts were initially screened by one reviewer (PBu). 20% of the abstracts were independently reviewed by two other reviewers (SS and AW), blinded to the results of the initial screening. All full text reviews were done by two blinded reviewers independently. Disagreements were resolved by group discussion between PBu, SS, AW and PBo. Excel was used for collation of search results, titles, and abstracts. Rayyan was used in the full text review process.

Data extraction was performed independently by two reviewers. The following data were extracted to an Excel spreadsheet: study design, setting, participant inclusion criteria, method of measurement of continuity, type of continuity measured, outcomes analysed, temporal relationship of continuity to outcomes in the study, co-variates, and quantitative data for continuity measures and outcomes. Disagreements were resolved by documented discussion or involvement of a third reviewer.

Study risk of bias assessment

Cohort studies were assessed for risk of bias at a study level using the QUIP tool by two reviewers acting independently [29]. Trials were assessed using the Cochrane risk of bias tool. The use of the QUIP tool was a deviation from the review protocol, as the Newcastle-Ottawa tool named in the protocol was less suitable for the type of cohort studies returned in the search. Any disagreements in rating were resolved by documented discussion.

Analysis

As outlined in our original protocol, our preferred analysis strategy was to perform meta-analysis. However, we were unable to do this as insufficient numbers of studies reported data amenable to the calculation of an effect size. Instead, we used a box-score method [30]. This involved assessing and tabulating the relationship between each continuity measure and each outcome in each study. These relationships were recorded as either positive, negative or non-significant (using a conventional p value of < 0.05 as our cut off for significance). Advantages and disadvantages of this method are explored in the discussion section. Where a study used both bivariate analysis and multivariate analysis, the results from the multivariate analysis were extracted. Results were marked as “mixed” where more than one measure for an outcome was used and the significance/direction differed between outcome measures. Sensitivity analysis of study quality and size was carried out.
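The box-score tabulation described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' actual procedure: the function names (`classify`, `outcome_label`, `box_score`) and the example studies and p values are hypothetical, and only the 0.05 cut-off and the "mixed" rule are taken from the text.

```python
from collections import Counter

ALPHA = 0.05  # conventional significance cut-off stated in the text


def classify(direction, p_value):
    """Label one continuity-outcome relationship as positive, negative or non-significant."""
    if p_value >= ALPHA:
        return "non-significant"
    return direction  # "positive" or "negative"


def outcome_label(results):
    """Collapse all measures of one outcome within one study into a single label.

    results is a list of (direction, p_value) tuples. If the measures disagree
    in significance or direction, the outcome is recorded as "mixed".
    """
    labels = {classify(direction, p) for direction, p in results}
    return labels.pop() if len(labels) == 1 else "mixed"


def box_score(studies):
    """Count labels per outcome across studies.

    studies maps a study id to {outcome name: [(direction, p_value), ...]}.
    """
    tally = {}
    for outcomes in studies.values():
        for outcome, results in outcomes.items():
            tally.setdefault(outcome, Counter())[outcome_label(results)] += 1
    return tally


# Hypothetical studies, invented purely for illustration.
example = {
    "study_A": {"quality of life": [("positive", 0.01)], "mortality": [("positive", 0.20)]},
    "study_B": {"quality of life": [("positive", 0.03), ("negative", 0.60)]},
    "study_C": {"quality of life": [("negative", 0.04)]},
}

scores = box_score(example)
for outcome, counts in scores.items():
    print(outcome, dict(counts))
```

Note that every study contributes exactly one count per outcome regardless of its size, which is the limitation of the method returned to in the discussion.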

Results

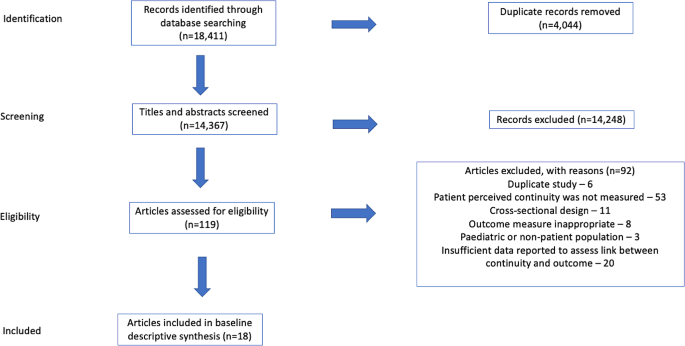

Figure 1 shows the search results and number of inclusions/exclusions. Studies were excluded for a number of reasons, including having inappropriate outcome measures [31], focusing on non-adult patient populations [32] and reporting insufficient data to examine the relationship between continuity and outcomes [33]. All studies are described in Table 2.

Study settings

Studies took place in nine different, mostly economically developed, countries. Studies were set in primary care (n = 5), hospital/specialist outpatient (n = 7), hospital in-patient (n = 5), or the general population (n = 2).

Study design and assessment of bias

All included studies, apart from one trial [34], were cohort studies. Study duration varied from 2 months to 5 years. Most studies were rated as being low-moderate or moderate risk of bias, due to outcomes being patient reported, issues with recruitment, inadequately describing cohort populations, significant rates of attrition and/or failure to account for patients lost to follow up.

Measurement of continuity

The majority of the studies (15/19) measured continuity using a validated, multifactorial patient reported measure of continuity or using the continuity/co-ordination subscale of another validated instrument. Two studies placed patients into discrete groups of continuity based on answers to pre-defined questions (e.g., "do you have a regular GP that you see?") [35, 36], one used a bespoke questionnaire [34], and one calculated an administrative measure of continuity (UPC – Usual Provider of Care index) using patient reported visit data collected from patient interviews [37]. Ten studies reported more than one type of patient reported continuity, four reported relational continuity, three reported overall continuity, one informational continuity and one management continuity.
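For readers unfamiliar with the UPC index mentioned above, the sketch below shows how it is commonly defined: the share of a patient's visits that were with their most frequently seen provider. The function name `upc_index` and the visit list are hypothetical, and the exact variant used in the included study [37] is not described here, so this should be read as an illustration of the general idea only.

```python
from collections import Counter


def upc_index(visits):
    """Usual Provider of Care index: visits to the most-seen provider / all visits.

    visits is a list of provider identifiers, one per consultation.
    The result lies between 1/len(visits) and 1.0 (every visit with one provider).
    """
    if not visits:
        raise ValueError("at least one visit is required")
    most_seen_count = Counter(visits).most_common(1)[0][1]
    return most_seen_count / len(visits)


# Hypothetical patient-reported visit history: three of four visits with "Dr A".
reported_visits = ["Dr A", "Dr A", "Dr B", "Dr A"]
print(upc_index(reported_visits))  # 0.75
```

Because the index depends only on which provider was seen at each visit, it says nothing about information transfer or how joined up care felt to the patient.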

Study outcomes

Most of the studies reported more than one outcome measure. To enable comparison across studies we grouped the most common outcome measures together. These were quality of life (n = 11), self-reported health status (n = 8), emergency department use or hospitalisation (n = 7), and mortality (n = 4). Other outcomes reported included physiological parameters e.g., blood pressure or blood test parameters (n = 2) [36, 38] and other indicators of functioning or well-being (n = 6).

Association between outcomes and continuity measures

Twelve of the nineteen studies demonstrated at least one statistically significant association between at least one patient reported measure of continuity and at least one outcome. However, ten of these studies examined more than one outcome measure. Two of these significant studies showed negative findings; better informational continuity was associated with worse self-reported disease status [35] and improved continuity was related to increased admissions and ED use [39]. Four studies demonstrated no association between measures of continuity and any health outcomes.

The four most commonly reported types of outcomes were analysed separately (Table 3). All the outcomes had a majority of studies showing no significant association with continuity or a mixed/unclear association. Sensitivity analysis of the results in Table 3, excluding high and moderate-high risk studies, did not change this finding. Each of these outcomes was also examined in relation to the type of continuity that was measured (Table 4). Apart from the relationship between informational continuity and quality of life, all other combinations of continuity type/outcome had a majority of studies showing no significant association with continuity or a mixed/unclear association. However, the relationship between informational continuity and quality of life was only examined in two separate studies [40, 41]. One of these studies contained fewer than 100 patients and was removed when sensitivity analysis of study size was carried out [40]. Sensitivity analysis of the results in Table 4, excluding high and moderate-high risk studies, did not change the findings.

Two sensitivity analyses of study size were carried out: (a) removing all studies with fewer than 100 participants and (b) removing those with fewer than 1000 participants. There were only five studies with at least 1000 participants. These all showed at least one positive association between continuity and a health outcome. Of note, three of these five studies examined emergency department use/readmissions and all three found a significant positive association.
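The size and quality filters used in these sensitivity analyses can be illustrated as below. This is a sketch under stated assumptions: the `Study` record, its field names and the four example studies are hypothetical, and only the thresholds (100 and 1000 participants) and the excluded risk categories (high and moderate-high) come from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Study:
    name: str
    participants: int
    risk_of_bias: str   # e.g. "low-moderate", "moderate", "moderate-high", "high"
    any_positive: bool  # at least one significant positive association reported


EXCLUDED_RISK = {"moderate-high", "high"}


def at_least(studies, min_participants):
    """Keep studies with at least min_participants participants."""
    return [s for s in studies if s.participants >= min_participants]


def drop_high_risk(studies):
    """Remove studies rated high or moderate-high risk of bias."""
    return [s for s in studies if s.risk_of_bias not in EXCLUDED_RISK]


def count_positive(studies):
    """Return (studies with a positive finding, total studies)."""
    return sum(s.any_positive for s in studies), len(studies)


# Hypothetical studies, invented purely for illustration.
studies = [
    Study("A", 60, "moderate", False),
    Study("B", 450, "moderate-high", True),
    Study("C", 1200, "low-moderate", True),
    Study("D", 5000, "moderate", True),
]

for label, subset in [
    ("all studies", studies),
    ("at least 100 participants", at_least(studies, 100)),
    ("at least 1000 participants", at_least(studies, 1000)),
    ("excluding high/moderate-high risk", drop_high_risk(studies)),
]:
    positive, total = count_positive(subset)
    print(f"{label}: {positive}/{total} with a positive association")
```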

Discussion

Continuity of care is a multi-dimensional concept that is often linked to positive health outcomes. There is strong evidence that administrative measures of continuity are associated with improved health outcomes including a reduction in mortality, healthcare costs and utilisation of healthcare [3, 18, 19]. Our interpretation of the evidence in this review is that there is an emerging link between patient reported continuity and health outcomes. Most studies in the review contained at least one significant association between continuity and a health outcome. However, when outcome measures were examined individually, the findings were less consistent.

The evidence for a link between patient reported continuity and health outcomes is not as strong as that for administrative measures. There are several possible explanations for this. The review retrieved a relatively small number of studies that examined a range of different outcomes, in different patient populations, in different settings, using different measures of continuity. This resulted in small numbers of studies examining the relationship of a particular measure of continuity with a particular outcome (Table 4). The studies in the review took place in a wide variety of countries and healthcare settings and it may be that the effects of continuity vary in different contexts. Finally, in comparison to studies of administrative measures of continuity, the studies in this review were small: the median number of participants in the studies was 486, compared to 39,249 in a recent systematic review examining administrative measures of continuity [18]. Smaller studies are less able to detect small effect sizes and this may be the principal reason for the difference between the results of this review and previous reviews of administrative measures of continuity. When studies with fewer than 1000 participants were excluded, all remaining studies showed at least one positive finding and there was a consistent association between reduction in emergency department use/re-admissions and continuity. This suggests that a modest association between certain outcomes and patient reported continuity may be present but, due to effect size, larger studies are needed to demonstrate it. The box score method does not take account of differential size of studies.
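To make the point about study size concrete, the sketch below estimates how many participants are needed to detect a correlation of a given size, using the standard Fisher z approximation. This calculation is not part of the review: the function name `n_for_correlation`, the choice of a correlation as the effect measure, and the default 5% two-sided significance level and 80% power are all assumptions made for illustration.

```python
import math
from statistics import NormalDist


def n_for_correlation(r, alpha=0.05, power=0.80):
    """Approximate sample size needed to detect a true correlation r.

    Uses the Fisher z approximation:
        n = ((z_{1 - alpha/2} + z_{power}) / atanh(r))^2 + 3
    with a two-sided test at level alpha.
    """
    if not 0 < abs(r) < 1:
        raise ValueError("r must be non-zero and strictly between -1 and 1")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    return math.ceil(((z_alpha + z_power) / math.atanh(abs(r))) ** 2 + 3)


# Smaller assumed effects require far larger samples.
for r in (0.3, 0.1, 0.05):
    print(f"r = {r}: about {n_for_correlation(r)} participants")
```

Under these assumptions, a study of a few hundred participants would be adequately powered for a moderate correlation but not for a weak one, which is consistent with the pattern seen when only the larger studies are considered.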

Continuity is not a concept that is universally agreed upon. We mapped concepts of continuity onto the commonly used Haggerty framework [11]. Apart from the use of the Nijmegen Continuity of care questionnaire in three studies [42], all studies measured continuity using different methods and concepts of continuity. We could have used other theoretical constructs of continuity for the mapping of measures. It was not possible to find the exact questions asked of patients in every study. We therefore mapped several of the continuity measures based on higher level descriptions given by the authors. The diversity of patient measures may account for some of the variability in findings between studies. However, it may be that the nature of continuity captured by patient reported measures is less closely linked to health outcomes than that captured by administrative measures. Administrative measures capture the pattern of interactions between patients and clinicians. All studies in this review (apart from Study 18) use PREMs that attempt to capture something different to the pattern in which a patient sees a clinician. Depending on the specific measure used, this includes: aspects of information transfer between services, how joined up care was between different providers and the nature of the patient-clinician relationship. PREMs can only capture what the patient perceives and remembers. The experience of continuity for the patient is important in its own right. However, it may be that the aspects of continuity that are most linked to positive health outcomes are best reflected by administrative measures. Sidaway-Lee et al. have hypothesised why relational continuity may be linked to health outcomes [43]. This includes the ability for a clinician to think more holistically and the motivation to “go the extra mile” for a patient. Whilst these are difficult to measure directly, it may be that administrative measures are a better proxy marker than PREMs for these aspects of continuity.

Conclusions/future work

This review shows a potential emerging relationship between patient reported continuity and health outcomes. However, the evidence for this association is currently weaker than that demonstrated in previous reviews of administrative measures of continuity.

If continuity is to be measured and improved, as is being proposed in some health systems [44], these findings have potential implications as to what type of measure we should use. Measurement of health system performance often drives change [45]. Health systems may respond to calls to improve continuity differently, depending on how continuity is measured. Continuity PREMs are important and patient experienced continuity should be a goal in its own right. However, it is the fact that continuity is linked to multiple positive health care and health system outcomes that is often given as the reason for pursuing it as a goal [8, 44, 46]. Whilst this review shows there is emerging evidence of a link, it is not as strong as that found in studies of administrative measures. If, as has been shown in other work, PREMs and administrative measures are looking at different things [23, 24], we need to choose our measures of continuity carefully.

Larger studies are required to confirm the emerging link between patient experienced continuity and outcomes shown in this paper. Future studies, where possible, should collect both administrative and patient reported measures of continuity and seek to understand the relative importance of the three different aspects of continuity (relational, informational, management). The relationship between patient experienced continuity and outcomes is likely to vary between different groups and future work should examine differential effects in different patient populations. There are now several validated measures of patient experienced continuity [17, 20, 21, 42]. Whilst there may be an argument that more should be developed, the use of a standardised questionnaire (such as the Nijmegen questionnaire) where possible would enable closer comparison between patient experiences in different healthcare settings.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

References

1. Gray DJP, Sidaway-Lee K, White E, Thorne A, Evans PH. Continuity of care with doctors - a matter of life and death? A systematic review of continuity of care and mortality. BMJ Open. 2018;8(6):1–12.

2. Barker I, Steventon A, Deeny SR. Association between continuity of care in general practice and hospital admissions for ambulatory care sensitive conditions: cross sectional study of routinely collected, person level data. BMJ Online. 2017;356.

3. Bazemore A, Merenstein Z, Handler L, Saultz JW. The impact of interpersonal continuity of primary care on health care costs and use: a critical review. Ann Fam Med. 2023;21(3):274–9.

4. Palmer W, Hemmings N, Rosen R, Keeble E, Williams S, Imison C. Improving access and continuity in general practice. The Nuffield Trust; 2018 [cited 2022 Jan 15]. https://www.nuffieldtrust.org.uk/research/improving-access-and-continuity-in-general-practice

5. Pettigrew LM, Kumpunen S, Rosen R, Posaner R, Mays N. Lessons for ‘large-scale’ general practice provider organisations in England from other inter-organisational healthcare collaborations. Health Policy. 2019;123(1):51–61.

6. Glenister KM, Guymer J, Bourke L, Simmons D. Characteristics of patients who access zero, one or multiple general practices and reasons for their choices: a study in regional Australia. BMC Fam Pract. 2021;22(1):2.

7. Kringos D, Boerma W, Bourgueil Y, Cartier T, Dedeu T, Hasvold T, et al. The strength of primary care in Europe: an international comparative study. Br J Gen Pract. 2013;63(616):e742–50.

8. Salisbury H. Helen Salisbury: everyone benefits from continuity of care. BMJ. 2023;382:p1870.

9. Gray DP, Sidaway-Lee K, Johns C, Rickenbach M, Evans PH. Can general practice still provide meaningful continuity of care? BMJ. 2023;383:e074584.

10. Ladds E, Greenhalgh T. Modernising continuity: a new conceptual framework. Br J Gen Pract. 2023;73(731):246–8.

11. Haggerty JL, Reid R, Freeman G, Starfield B, Adair CE, McKendry R. Continuity of care: a multidisciplinary review. BMJ. 2003;327(7425):1219–21.

12. Freeman G, Shepperd S, Robinson I, Ehrich K, Richards S, Pitman P, et al. Continuity of care: report of a scoping exercise for the national co-ordinating centre for NHS service delivery and organisation R & D. 2001 [cited 2020 Oct 15]. https://njl-admin.nihr.ac.uk/document/download/2027166

13. Saultz JW. Defining and measuring interpersonal continuity of care. Ann Fam Med. 2003;1(3):134–43.

14. Uijen AA, Schers HJ, Schellevis FG, Van den Bosch WJHM. How unique is continuity of care? A review of continuity and related concepts. Fam Pract. 2012;29(3):264–71.

15. Murphy M, Salisbury C. Relational continuity and patients’ perception of GP trust and respect: a qualitative study. Br J Gen Pract. 2020;70(698):e676–83.

16. Gray DP, Sidaway-Lee K, Whitaker P, Evans P. Which methods are most practicable for measuring continuity within general practices? Br J Gen Pract. 2023;73(731):279–82.

17. Uijen AA, Schers HJ. Which questionnaire to use when measuring continuity of care. J Clin Epidemiol. 2012;65(5):577–8.

18. Baker R, Bankart MJ, Freeman GK, Haggerty JL, Nockels KH. Primary medical care continuity and patient mortality. Br J Gen Pract. 2020;70(698):E600–11.

19. Van Walraven C, Oake N, Jennings A, Forster AJ. The association between continuity of care and outcomes: a systematic and critical review. J Eval Clin Pract. 2010;16(5):947–56.

20. Aller MB, Vargas I, Garcia-Subirats I, Coderch J, Colomés L, Llopart JR, et al. A tool for assessing continuity of care across care levels: an extended psychometric validation of the CCAENA questionnaire. Int J Integr Care. 2013;13(OCT/DEC):1–11.

21. Haggerty JL, Roberge D, Freeman GK, Beaulieu C, Bréton M. Validation of a generic measure of continuity of care: when patients encounter several clinicians. Ann Fam Med. 2012;10(5):443–51.

22. Bentler SE, Morgan RO, Virnig BA, Wolinsky FD, Hernandez-Boussard T. The association of longitudinal and interpersonal continuity of care with emergency department use, hospitalization, and mortality among medicare beneficiaries. PLoS ONE. 2014;9(12):1–18.

23. Bentler SE, Morgan RO, Virnig BA, Wolinsky FD. Do claims-based continuity of care measures reflect the patient perspective? Med Care Res Rev. 2014;71(2):156–73.

24. Rodriguez HP, Marshall RE, Rogers WH, Safran DG. Primary care physician visit continuity: a comparison of patient-reported and administratively derived measures. J Gen Intern Med. 2008;23(9):1499–502.

25. Adler R, Vasiliadis A, Bickell N. The relationship between continuity and patient satisfaction: a systematic review. Fam Pract. 2010;27(2):171–8.

26. Bodenheimer T, Sinsky C. From triple to quadruple aim: care of the patient requires care of the provider. Ann Fam Med. 2014;12(6):573–6.

27. Althubaiti A. Information bias in health research: definition, pitfalls, and adjustment methods. J Multidiscip Healthc. 2016;9:211–7.

28. Schultz EM, McDonald KM. What is care coordination? Int J Care Coord. 2014;17(1–2):5–24.

29. Hayden JA, van der Windt DA, Cartwright JL, Côté P, Bombardier C. Assessing bias in studies of prognostic factors. Ann Intern Med. 2013;158(4):280–6.

30. Green BF, Hall JA. Quantitative methods for literature reviews. Annu Rev Psychol. 1984;35(1):37–54.

31. Safran DG, Montgomery JE, Chang H, Murphy J, Rogers WH. Switching doctors: predictors of voluntary disenrollment from a primary physician’s practice. J Fam Pract. 2001;50(2):130–6.

32. Burns T, Catty J, Harvey K, White S, Jones IR, McLaren S, et al. Continuity of care for carers of people with severe mental illness: results of a longitudinal study. Int J Soc Psychiatry. 2013;59(7):663–70.

33. Engelhardt JB, Rizzo VM, Della Penna RD, Feigenbaum PA, Kirkland KA, Nicholson JS, et al. Effectiveness of care coordination and health counseling in advancing illness. Am J Manag Care. 2009;15(11):817–25.

34. Uijen AA, Bischoff EWMA, Schellevis FG, Bor HHJ, Van Den Bosch WJHM, Schers HJ. Continuity in different care modes and its relationship to quality of life: a randomised controlled trial in patients with COPD. Br J Gen Pract. 2012;62(599):422–8.

35. Humphries C, Jaganathan S, Panniyammakal J, Singh S, Dorairaj P, Price M, et al. Investigating discharge communication for chronic disease patients in three hospitals in India. PLoS ONE. 2020;15(4):1–20.

36. Konrad TR, Howard DL, Edwards LJ, Ivanova A, Carey TS. Physician-patient racial concordance, continuity of care, and patterns of care for hypertension. Am J Public Health. 2005;95(12):2186–90.

37. Van Walraven C, Taljaard M, Etchells E, Bell CM, Stiell IG, Zarnke K, et al. The independent association of provider and information continuity on outcomes after hospital discharge: implications for hospitalists. J Hosp Med. 2010;5(7):398–405.

38. Gulliford MC, Naithani S, Morgan M. Continuity of care and intermediate outcomes of type 2 diabetes mellitus. Fam Pract. 2007;24(3):245–51.

39. Kaneko M, Aoki T, Mori H, Ohta R, Matsuzawa H, Shimabukuro A, et al. Associations of patient experience in primary care with hospitalizations and emergency department visits on isolated islands: a prospective cohort study. J Rural Health. 2019;35(4):498–505.

40. Beesley VL, Janda M, Burmeister EA, Goldstein D, Gooden H, Merrett ND, et al. Association between pancreatic cancer patients’ perception of their care coordination and patient-reported and survival outcomes. Palliat Support Care. 2018;16(5):534–43.

41. Valaker I, Fridlund B, Wentzel-Larsen T, Nordrehaug JE, Rotevatn S, Råholm MB, et al. Continuity of care and its associations with self-reported health, clinical characteristics and follow-up services after percutaneous coronary intervention. BMC Health Serv Res. 2020;20(1):1–15.

42. Uijen AA, Schellevis FG, Van Den Bosch WJHM, Mokkink HGA, Van Weel C, Schers HJ. Nijmegen continuity questionnaire: development and testing of a questionnaire that measures continuity of care. J Clin Epidemiol. 2011;64(12):1391–9.

43. Sidaway-Lee K, Gray DP, Evans P, Harding A. What mechanisms could link GP relational continuity to patient outcomes? Br J Gen Pract. 2021;(June):278–81.

44. House of Commons Health and Social Care Committee. The future of general practice. 2022. https://publications.parliament.uk/pa/cm5803/cmselect/cmhealth/113/report.html

45. Close J, Byng R, Valderas JM, Britten N, Lloyd H. Quality after the QOF? Before dismantling it, we need a redefined measure of ‘quality’. Br J Gen Pract. 2018;68(672):314–5.

46. Gray DJP. Continuity of care in general practice. BMJ. 2017;356:j84.

Acknowledgements

Not applicable.

Funding

Patrick Burch carried this work out as part of a PhD Fellowship funded by THIS Institute.

Author information

Contributions

PBu conceived the review and performed the searches. PBu, AW and SS performed the paper selections, reviews and data abstractions. PBo helped with the design of the review and was involved in resolving the reviewer disputes. All authors contributed towards the drafting of the final manuscript.

Ethics declarations

Ethics approval

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Burch, P., Walter, A., Stewart, S. et al. Patient reported measures of continuity of care and health outcomes: a systematic review. BMC Prim. Care 25, 309 (2024). https://doi.org/10.1186/s12875-024-02545-8
